Introduction to Voice Interactions

Lessons from Building an Alexa Skill

Dissecting vocal instructions programmatically

Did you ever wonder how Amazon’s Alexa or Apple’s Siri understands what you are saying and then knows how to respond meaningfully to your request?

We had some general ideas of how that works, based on what we knew about natural language understanding and automatic speech recognition, but until we actually built an Alexa Skill for one of our clients, we didn’t really know the mechanics of how voice experiences (VX) are built.

What is a conversation?

Let’s be honest. You’re not really having a conversation with Alexa or Siri. It is a conversation-like interaction with a computer application that can process human language and return a response from a preset list of options. However polite and courteous we program the app to be, you are really issuing commands to a computer. It is a dialogue only in the sense that it mimics the “call and response” of conversation.

A real conversation between two free-thinking beings allows for either party to control the direction of the conversation. No one wants to listen to Alexa digress for twenty minutes into a rambling story about itself when you just want to know what the weather will be today. We own these digital assistants because we want them to perform tasks for us — not because we want them to be companions (at least not yet).

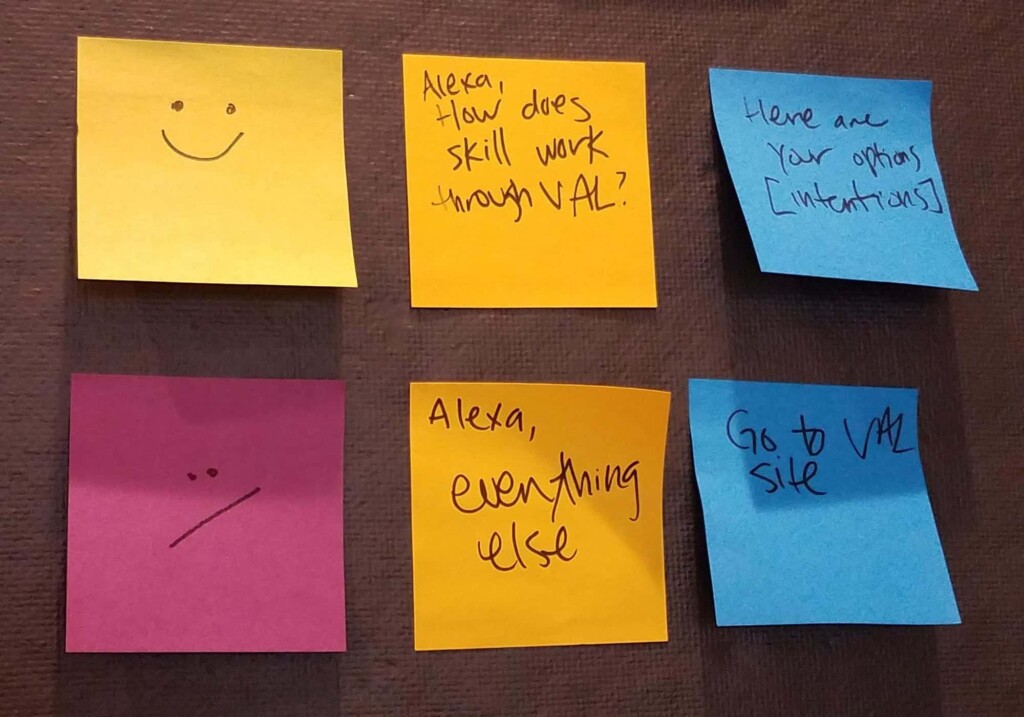

Voice experiences are pre-organized and pre-planned

In order for a computer program to interpret vocal instructions, the process for interpretation must be systematically defined. Amazon provides clear documentation of the process Alexa follows to interpret spoken requests for the Skill you are developing, and more precisely, for its content. Google has its own guidelines for developing Actions that work with Google Assistant.

Regardless of platform, voice requests follow a similar model for how interactions are structured. They all rely on three basic components:

- Intent

- Utterances

- Responses

Voice assistants take the raw speech from your request and break it down into these common building blocks using their proprietary natural language understanding (NLU) engines. The extracted components are then passed to the developer’s app, where a custom response is built and returned to the user.
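To make that hand-off concrete, here is a minimal Python sketch of the developer’s side, assuming Alexa’s JSON request and response format and no SDK. The app never sees audio: it receives an intent name plus any slot values the NLU extracted, and it returns text for Alexa to speak. The intent name `JackpotIntent` and the trimmed sample request are hypothetical; `lotteryGame` is the slot used in the sample utterances later in this post.

```python
from __future__ import annotations


def extract_intent(event: dict) -> tuple[str | None, dict[str, str]]:
    """Pull the intent name and filled slot values out of an Alexa-style request."""
    request = event.get("request", {})
    if request.get("type") != "IntentRequest":
        return None, {}
    intent = request.get("intent", {})
    # Slots the user did not fill can appear without a "value" key, so skip them.
    slots = {
        name: slot["value"]
        for name, slot in intent.get("slots", {}).items()
        if "value" in slot
    }
    return intent.get("name"), slots


def build_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain-text speech in an Alexa-style response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


# Trimmed request mirroring the JSON Alexa sends; "JackpotIntent" is hypothetical.
sample_event = {
    "version": "1.0",
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "JackpotIntent",
            "slots": {"lotteryGame": {"name": "lotteryGame", "value": "powerball"}},
        },
    },
}

name, slots = extract_intent(sample_event)
print(name, slots)  # JackpotIntent {'lotteryGame': 'powerball'}
reply = build_response(f"Looking up the {slots['lotteryGame']} jackpot.")
print(reply["response"]["outputSpeech"]["text"])  # Looking up the powerball jackpot.
```

Keeping extraction and response-building as separate functions leaves the middle step, deciding what to say, as ordinary application code.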

Intent is essentially the end goal of the person issuing the command. It’s what you want: you want to know what the weather will be, you want to order a pizza, you want to listen to music, etc.

Utterances are all the possible ways you may ask for the thing you want. This is where things get interesting from a linguistic point of view. How you ask for something can vary dramatically from one person to the next, and as designers of voice apps, we have to account for this variety of potential inputs. “I want to order delivery. I want take-out. Have a pizza delivered. I want Domino’s.” These are just a few ways we may ask for the same thing.
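Real assistants use statistical NLU models to map this variety onto an intent, but a toy matcher shows the basic idea of utterance templates with slots. The Python sketch below is a stand-in, not how Alexa works internally: it turns each template (written in the `{slotName}` style used in the snippet later in this post) into a regular expression and returns the slot values from the first template that matches.

```python
from __future__ import annotations

import re

SLOT = re.compile(r"\{(\w+)\}")


def compile_template(template: str) -> tuple[re.Pattern, list[str]]:
    """Turn 'what is the jackpot for {lotteryGame}' into a regex plus slot names."""
    # Splitting on a capturing group alternates literal text and slot names.
    parts = SLOT.split(template.strip())
    pattern, slot_names = "", []
    for i, part in enumerate(parts):
        if i % 2 == 0:
            pattern += re.escape(part)  # literal words must match exactly
        else:
            pattern += "(.+?)"  # a slot captures one or more characters
            slot_names.append(part)
    return re.compile(f"^{pattern}$", re.IGNORECASE), slot_names


def match_utterance(text: str, templates: list[str]) -> list[tuple[str, str]] | None:
    """Return (slot, value) pairs for the first matching template, or None.

    An empty list means a template matched but it has no slots.
    """
    cleaned = " ".join(text.split())  # collapse extra whitespace
    for template in templates:  # order matters: the first match wins
        regex, slot_names = compile_template(template)
        match = regex.match(cleaned)
        if match:
            return list(zip(slot_names, match.groups()))
    return None


TEMPLATES = [
    "what is the jackpot",
    "what is the jackpot for {lotteryGame}",
    "{lotteryGame} jackpot",
    "tell me the jackpot for {lotteryGame} and {lotteryGame}",
]

print(match_utterance("What is the jackpot for Powerball", TEMPLATES))
# [('lotteryGame', 'Powerball')]
print(match_utterance("order a pizza", TEMPLATES))
# None
```

The "first match wins" rule is a crude substitute for the ranking a real NLU performs; with a loose template like `{lotteryGame} jackpot`, ordering is the only thing stopping it from swallowing "what is the jackpot".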

Responses are all the possible answers, confirmations, or follow-up questions the voice application may return based on how it understands your Intent and your Utterance. A well-programmed voice application will choose from a list of Responses that help advance the interaction in a meaningful way, such as, “I understand you want to order food for delivery. Here are three restaurants that deliver to your location,” or, “I heard you say you want Domino’s. I can read you the menu options or take an order directly if you know what you want.”
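Because every response is pre-written, choosing one can be as simple as checking what the app understood. The Python sketch below picks an answer when the game is known, a follow-up question when it is missing, and a fallback when the game is not recognized, with a little variety among answers. The copy, the `lotteryGame` slot handling, and the jackpot figure are placeholders for illustration, not real data.

```python
from __future__ import annotations

import random

# Placeholder copy: every word the skill can say is written in advance.
RESPONSES = {
    "answer": [
        "The {game} jackpot is {amount}.",
        "Right now, the {game} jackpot is {amount}.",
    ],
    "ask_game": ["Which lottery game would you like the jackpot for?"],
    "unknown_game": ["Sorry, I don't have a jackpot for {game}. Which game did you mean?"],
}

# Placeholder figure standing in for a real jackpot lookup; not real data.
JACKPOTS = {"powerball": "$100 million"}


def choose_response(slots: dict[str, str], rng: random.Random | None = None) -> str:
    """Pick pre-written copy based on what the app understood from the user."""
    rng = rng if rng is not None else random.Random()
    game = slots.get("lotteryGame")
    if not game:
        # Intent understood but the game is missing: ask a follow-up question.
        key, values = "ask_game", {}
    elif game.lower() in JACKPOTS:
        # Everything needed is present: answer, varying the wording a little.
        key, values = "answer", {"game": game, "amount": JACKPOTS[game.lower()]}
    else:
        # Game heard but not recognized: say so and ask again.
        key, values = "unknown_game", {"game": game}
    return rng.choice(RESPONSES[key]).format(**values)


print(choose_response({}))
# Which lottery game would you like the jackpot for?
print(choose_response({"lotteryGame": "Powerball"}, random.Random(0)))
```

Passing in a seeded `random.Random` keeps the varied wording testable while still avoiding the robotic feel of repeating one sentence.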

A code snippet of the sample utterances for a lottery Skill, listing the ways a user may ask about the jackpot or prize for a lottery game:

```json
{
  "samples": [
    "tell me the jackpot for {lotteryGame} and {lotteryGame}",
    "what is the current jackpot",
    "what is the largest jackpot coming up",
    "what is the largest jackpot",
    "what is the prize",
    "how much can i win",
    "what is the jackpot",
    "what are the winnings for {lotteryGame}",
    "{lotteryGame} winnings",
    "{lotteryGame} jackpot",
    "{lotteryGame} payout",
    "{lotteryGame} prize",
    "what are the {lotteryGame} winnings",
    "what is the {lotteryGame} winnings",
    "what is the {lotteryGame} prize",
    "what is the {lotteryGame} payout",
    "what is the {lotteryGame} jackpot",
    "how much is the payout for {lotteryGame}",
    "how much is the prize for {lotteryGame}",
    "how much is the jackpot for {lotteryGame}",
    "how much can i win if i play {lotteryGame}",
    "what is the prize for {lotteryGame}",
    "what is the jackpot for {lotteryGame}",
    "what is the payout for {lotteryGame}"
  ]
}
```
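A list like this is easy to get wrong by hand: a sample repeated twice, a stray space, or a misspelled slot name. The following Python sketch is a hypothetical pre-upload check, not part of any Amazon tooling. It assumes an intent shaped like the ones in an Alexa interaction model (a `name`, a `slots` list of `name`/`type` pairs, and `samples`); the intent name and the `LotteryGameType` slot type are made up.

```python
from __future__ import annotations

import re
from collections import Counter

SLOT_REF = re.compile(r"\{(\w+)\}")


def check_intent(intent: dict) -> list[str]:
    """Return a list of problems found in one intent's sample utterances."""
    problems: list[str] = []
    samples = intent.get("samples", [])
    declared = {slot["name"] for slot in intent.get("slots", [])}

    # Compare case- and whitespace-insensitively so near-duplicates are caught.
    counts = Counter(" ".join(s.lower().split()) for s in samples)
    for sample, count in counts.items():
        if count > 1:
            problems.append(f"duplicate sample ({count}x): {sample!r}")

    for raw in samples:
        if raw != raw.strip():
            problems.append(f"leading/trailing whitespace: {raw!r}")
        # Every {slot} referenced in a sample must be declared on the intent.
        for slot in SLOT_REF.findall(raw):
            if slot not in declared:
                problems.append(f"undeclared slot {{{slot}}} in {raw!r}")
    return problems


# Hypothetical intent; "JackpotIntent" and "LotteryGameType" are made-up names.
intent = {
    "name": "JackpotIntent",
    "slots": [{"name": "lotteryGame", "type": "LotteryGameType"}],
    "samples": [
        "what is the prize",
        "what is the prize",
        "what is the current jackpot ",
        "what is the jackpot for {lotteryGame}",
        "what is the payout for {lotteryGam}",
    ],
}

for problem in check_intent(intent):
    print(problem)
# duplicate sample (2x): 'what is the prize'
# leading/trailing whitespace: 'what is the current jackpot '
# undeclared slot {lotteryGam} in 'what is the payout for {lotteryGam}'
```

Running a check like this before each upload catches copy mistakes while the utterance list is still cheap to fix.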

Words, words, words

With these basic elements, you can begin to see how the “conversation” is structured. It’s entirely pre-planned, or more accurately, pre-written. The application does not generate the words it speaks to you. Every question, clarification, and answer it provides is written into the code.

In short, building a voice application is as much a copywriting exercise as it is a programming exercise. In fact, you could argue that it is almost entirely a copywriting activity. Because the interface is made entirely of language (as opposed to visual graphics), user experience for voice is about thinking of all the ways a human may ask a question or state a need, and then how that person will understand and act upon an automated response.

For interaction designers accustomed to working with visual interfaces, switching over to design voice interactions can be daunting because a very different set of rules dictates how information is shared. As visual designers, we have the luxury of all the nuance and contextual clues visual elements can provide. Most people know what a menu looks like, what a button does, and where they “are,” whether physically or virtually. We take these things for granted in an experience that has visual guides, but all of that disappears when there is only vocal interaction.

Brave new world

Now that we have built an Alexa Skill and are becoming more involved in voice experiences, it is clear to us that voice experience is going to be a critical aspect of our technological future. Given the rapid adoption of smart speakers and similar devices in homes and businesses across America, voice experience is already becoming a part of our everyday lives. We believe that trend will not only continue, but that voice will become part of every digital experience we encounter, not just smart speakers and smartphones.

This is a fundamentally new world, and we’re excited to be a part of it.

This post is part of a series of blog posts COLAB is writing about our exploration of voice experiences. Stay tuned for more!